Everyone has heard the terms "3D" and "holographic image." From Panasonic's first 3D TV system in 2010 to today's virtual reality and augmented reality technology, these terms have entered popular culture and increasingly hold our attention. After all, the real world is three-dimensional, so why should we limit our experience to a flat screen?

The transition from 2D to 3D is a natural step, much like the shift from black-and-white film and television to color in the 1950s. In many ways, though, the impact of moving from 2D to 3D may be even greater.

The significance of 3D is not just that it presents a more believable picture of the real world, but that it turns the digital world into a part of real life, with every detail as convincing as its real-world counterpart.

This coming watershed will profoundly affect how we work, learn, socialize and entertain ourselves. We will be able to break through the physical limits of the real world: the world will be as big as our imagination.

Of course, this shift will also affect the devices we use and how we interact with machines. That is why companies like Google, Facebook and Apple are moving into the 3D market as quickly as possible: whoever wins the 3D war can control the next generation of user interaction.

But for now, this remains only a dream. Although some have tried to open up the 3D market before, we still rely on narrow screens to enter the digital world. Why? Because current 3D has many shortcomings and the technology is not yet good enough to deliver a truly believable world, so consumers continue to wait.

Next, YiViAn will introduce 3D technology from a user's point of view, the challenges facing 3D products such as VR/AR headsets and naked-eye 3D displays, and how these products will become an intuitive interface between us and the digital world.

Why do we need a 3D picture?

Before diving into 3D technology, we need to understand the principles behind it.

Try threading a needle with one eye covered; you will find it harder than you expect. Evolution has shaped human 3D perception, allowing us to grasp real-world information faster and more accurately.

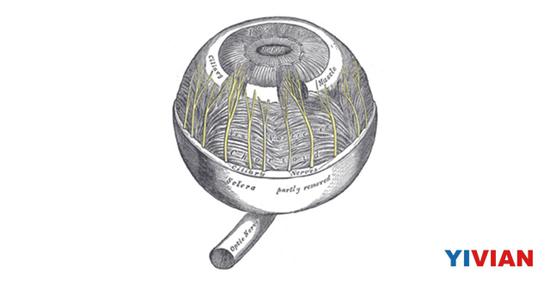

How do we acquire depth perception at the physiological level? This is a complicated subject. The human eye and brain can pick up many depth cues. In the real world these cues reinforce one another and form a clear 3D image of the environment in our minds.

If we use a 3D illusion to deceive the visual system and the brain, we may not be able to reproduce every depth cue realistically, and the resulting 3D experience will be flawed. We therefore need to understand the main depth cues.

Stereo vision (binocular parallax)

Believe it or not, your two eyes see different things. Each eye views an object from a slightly different angle, so the images formed on the two retinas also differ.

When we look at distant objects, the parallax between the left and right eyes is small. When the object is closer, the parallax increases. This difference allows the brain to measure and "feel" the distance to the object, triggering depth perception.
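The link between distance and parallax can be sketched with simple geometry. The snippet below is only an illustration, not a model of the visual system: it assumes an interpupillary distance of 63 mm (a commonly quoted typical value) and an object straight ahead, and the function name `vergence_angle_deg` is made up for this example.

```python
import math

# Assumed typical distance between the two pupils, in meters.
IPD_M = 0.063


def vergence_angle_deg(distance_m, ipd_m=IPD_M):
    """Angle between the two lines of sight when both eyes fixate
    a point straight ahead at the given distance."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


if __name__ == "__main__":
    # The angle shrinks quickly as the object moves away.
    for d in (0.25, 0.5, 2.0, 10.0):
        print(f"{d:5.2f} m -> {vergence_angle_deg(d):.3f} degrees")
```

The angle falls off roughly in proportion to 1/distance, which is why binocular parallax is a strong cue for nearby objects and a weak one for distant objects.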

Most 3D technology today relies on stereo vision to trick the brain into believing it perceives depth. These techniques present a different image to each eye. If you have seen a 3D movie (such as "Avatar" or "Star Wars"), you have experienced this effect through 3D glasses.

More advanced "autostereoscopic" display technology projects different images into different directions in space, so that each eye receives a different image without glasses.

Stereo vision is the most obvious depth cue, but it is not the only one. Humans can perceive depth with just one eye.

Close one eye and hold your index finger still in front of the other eye. Now move your head up and down and left and right. You will see the background appear to move relative to the finger. More precisely, your finger seems to move faster than the background.

This phenomenon is called motion parallax, and it is why you can perceive depth with one eye. Motion parallax is very important for a realistic 3D experience, because even a slight displacement of the viewer relative to the screen is noticeable.
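The finger experiment can be put into numbers. The following is a rough sketch under simple assumptions: the head moves sideways by a small amount, the objects start straight ahead, and the helper name `apparent_shift_deg` is hypothetical.

```python
import math


def apparent_shift_deg(head_shift_m, distance_m):
    """Angle by which an object that was straight ahead appears to shift
    when the head moves sideways by head_shift_m."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return math.degrees(math.atan2(head_shift_m, distance_m))


if __name__ == "__main__":
    head_shift = 0.05  # 5 cm sideways head movement (assumed)
    finger = apparent_shift_deg(head_shift, 0.3)      # finger at 30 cm
    background = apparent_shift_deg(head_shift, 5.0)  # wall at 5 m
    print(f"finger shifts {finger:.2f} degrees")
    print(f"background shifts {background:.2f} degrees")
```

For the same head movement the nearby finger sweeps through a much larger angle than the distant background, which is the relative motion the brain reads as depth.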

Stereoscopic vision without motion parallax still lets you perceive depth, but the 3D image will be distorted. For example, buildings in a 3D map will appear warped, and background objects will seem deliberately hidden by foreground objects, which is quite uncomfortable if you look closely.

Motion parallax is closely related to perspective: the fact that nearby objects look larger and distant objects look smaller is itself a depth cue.

Even if you sit completely still (no motion parallax) and close one eye (no stereo vision), you can still tell distant objects from nearby ones.

Now repeat the finger experiment: hold your index finger in front of your eyes and focus on it. As your eyes focus, the background becomes blurred. Shift your focus to the background and the finger blurs while the background becomes sharp. Our eyes can change focus, much as a modern camera does.

The eye changes the shape of its lens by contracting the ciliary muscle in order to change focus.

So how does the ciliary muscle know how hard to contract? The answer is that the brain runs a feedback loop in which the ciliary muscle keeps contracting and relaxing until the brain receives the sharpest image. This happens almost instantly, but if the ciliary muscle has to adjust too often, our eyes become very tired.

Only objects within about two meters trigger movement of the ciliary muscle. Beyond this distance, the eye begins to relax and focuses at infinity.

Conflict between visual convergence and visual adjustment

When your eyes fixate on a nearby point, they actually rotate in their sockets. As the lines of sight converge, the extraocular muscles stretch automatically, and the brain senses this movement and treats it as a depth cue. Convergence of the eyes is noticeable when you focus on an object within about 10 meters.

So when we look at the world with two eyes, we use two different sets of muscles. One set turns the eyes inward (convergence, also called vergence) so that both point at the same spot, while the other adjusts the sharpness of the image on the retina (visual adjustment, also called accommodation). If the eyes do not converge properly, we see a double image. If visual adjustment is off, we see a blurred image.

In the real world, visual convergence and visual adjustment work together. In fact, the nerves that trigger these two responses are linked.

But when watching 3D, virtual reality, or augmented reality content, our eyes usually focus at one fixed distance (such as the screen of a 3D theater), while the images delivered to the two eyes make them converge at another distance (for example, on a dragon leaping out of the screen).

At this point the brain has difficulty reconciling the two conflicting signals. This is why some viewers feel tired or even nauseous when watching 3D movies.
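One way to put a number on this conflict is to compare the two distances in diopters (1 divided by the distance in meters), the unit opticians use for focusing power. The sketch below follows that convention; the function name `conflict_diopters` and the example distances are illustrative assumptions, and no comfort threshold is implied.

```python
def conflict_diopters(focus_distance_m, convergence_distance_m):
    """Mismatch between where the eyes focus (e.g. the screen) and
    where they converge (e.g. the virtual object), in diopters."""
    if focus_distance_m <= 0 or convergence_distance_m <= 0:
        raise ValueError("distances must be positive")
    return abs(1.0 / focus_distance_m - 1.0 / convergence_distance_m)


if __name__ == "__main__":
    screen = 10.0  # cinema screen 10 m away (assumed)
    for dragon in (10.0, 5.0, 2.0, 1.0):
        mismatch = conflict_diopters(screen, dragon)
        print(f"object at {dragon:4.1f} m -> mismatch {mismatch:.2f} D")
```

The mismatch is zero when the virtual object sits at screen depth and grows as the object is pulled toward the viewer, which matches the observation that content leaping far out of the screen is the most tiring.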

Do you remember the red-and-blue stereo glasses made of cardboard? These "filter glasses" let the left eye see only red light and the right eye only blue light. A typical stereo image overlays a red image for the left eye on a blue image for the right eye.

When we look through the filter glasses, the visual cortex fuses the two images into a three-dimensional scene and corrects the colors of the image.

Today all glasses-based 3D systems, including virtual reality and augmented reality headsets, work on this principle: physically separating the images seen by the left and right eyes to create stereo vision.

At the cinema you are handed 3D glasses that look nothing like the red-and-blue pair: these are polarized glasses. They filter the image not by color but by the polarization of the light.

We can think of photons as vibrating entities that vibrate either horizontally or vertically.

On a movie screen, a special projector produces two overlapping images. One image sends only horizontally vibrating photons to the viewer, while the other sends vertically vibrating photons. The polarized lenses of the glasses ensure that each image reaches the intended eye.

If you have a pair of polarized glasses (don't steal them from the cinema), you can use them to observe polarization in the real world. Hold the glasses about 2 feet from your eyes and look through them at a car windshield or a water surface. As you rotate the glasses through 90 degrees, you should see the glare appear and disappear. This is how a polarizer works.
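The rotation experiment follows Malus's law: an ideal linear polarizer passes a fraction cos²θ of linearly polarized light, where θ is the angle between the light's polarization and the polarizer's axis. The snippet below is a minimal sketch that assumes an ideal polarizer and fully polarized glare (real glare is only partially polarized); the function name is made up for this example.

```python
import math


def transmitted_fraction(angle_deg):
    """Malus's law for an ideal linear polarizer: fraction of linearly
    polarized light that passes when the polarizer axis is rotated by
    angle_deg away from the light's polarization direction."""
    return math.cos(math.radians(angle_deg)) ** 2


if __name__ == "__main__":
    for angle in (0, 30, 45, 60, 90):
        print(f"{angle:3d} degrees -> {transmitted_fraction(angle):.3f}")
```

At 0 degrees everything passes and at 90 degrees essentially nothing does, which is why the glare comes and goes as the glasses are rotated, and why crossed polarizations can keep the two cinema images apart.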

Polarizers let the full color spectrum reach the eye, so the quality of the 3D image improves.

From the perspective of 3D image quality, these glasses provide only the stereo-vision depth cue. So although you can see depth in the image, if you leave your seat and walk around the cinema you will not be able to see around objects, and the background will move in the direction opposite to what motion parallax would produce, as if it were following you.

A more serious problem is that these glasses do not support visual adjustment, which leads to a conflict between visual convergence and visual adjustment. If you stare at a dragon approaching you from the screen, you will soon feel extreme visual discomfort.

That's why dragons in movies only ever fly past very fast: just long enough to startle you while sparing your eyes the discomfort.

If you have a 3D TV at home, the matching glasses are probably not polarized but use "active shutter" technology. This type of television alternately displays the right-eye and left-eye images, and the glasses block the corresponding eye in sync with the television. If the switching is fast enough, the brain blends the two signals into a coherent 3D image.

Why not use polarized glasses? Some TVs do, but on a TV the images with different polarizations must come from different pixels, so the resolution seen by the viewer is halved. (In the cinema the two images are projected onto the screen separately and can overlap, so resolution does not drop.)

Active-shutter 3D glasses generally support only the stereo-vision depth cue. Some more advanced systems track the viewer's head and adjust the content to support motion parallax, but only for a single viewer.

Virtual reality is a new type of 3D rendering that has recently received widespread attention. Many people have already tried it, and more will be able to in the next few years. So how does virtual reality work?

A VR headset does not work by filtering content from an external screen. Instead, it generates its own pair of images and presents each directly to the corresponding eye. A VR headset usually contains two microdisplays (one for the left eye and one for the right eye), whose images are magnified and adjusted by optical components and displayed at a specific position in front of the user's eyes.

If the resolution of the display is high enough, the image can be magnified further, so the user sees a wider field of view and the experience is more immersive.

Today's virtual reality systems, such as the Oculus Rift, track the user's position and add motion parallax to stereo vision.

The current generation of virtual reality systems does not support visual adjustment and is prone to the conflict between visual convergence and visual adjustment. To ensure a good user experience, this technical problem must be solved. These systems also cannot yet fully handle eye movements (limited range and distortion), though eye tracking technology may solve this in the future.

Similarly, augmented reality headsets are becoming more common. Augmented reality technology blends the digital world with the real world.

To ensure realism, augmented reality systems need not only to track the user's head movements in the real world, but also to take into account the real 3D environment the user is in.

If the recent reports about HoloLens and Magic Leap are accurate, the augmented reality field has made a huge leap.

Just as our two eyes see different views of the world, light from the real world enters the pupil from different directions. To trigger visual adjustment, a near-eye display must be able to simulate light emitted independently in all directions. This kind of optical signal is called a light field, and it is the key to the future of virtual reality and augmented reality products.

Until this technology is available, we will have to keep putting up with the headaches.

These are the challenges that head-mounted devices must overcome. Another problem they face is social and cultural acceptance (as the Google Glass experience showed). And if we want to display the world from multiple perspectives at the same time, we need a naked-eye 3D display system.

How can we experience 3D images without wearing any equipment?

By now it should be clear that to provide stereo vision, a 3D screen must project image content for different perspectives into different directions in space. The viewer's left and right eyes then naturally see different images, triggering depth perception. Such a system is called an "autostereoscopic display."

Because the 3D images are projected from the screen, an autostereoscopic display naturally supports both visual convergence and visual adjustment, as long as the 3D effect is not too exaggerated.

But this does not mean the system causes no eye discomfort. In fact, it has another problem: the transitions between viewing zones.

The images on such systems show frequent jumps, brightness changes, dark bands, breaks in the stereo effect, and so on. Worst of all, the content seen by the left and right eyes can be swapped, producing an inverted 3D experience.

So how should we solve these problems?

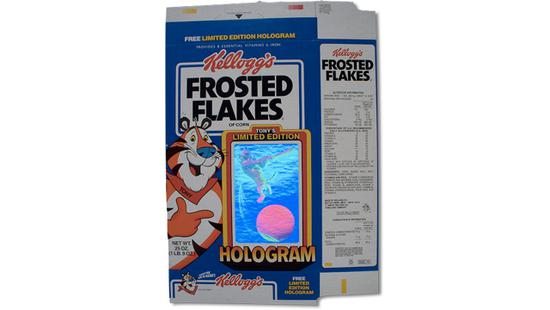

3M began commercial production of prism-film technology in 2009.

The prism film is inserted into the film stack of an ordinary LCD backlight, which can be lit from either the left or the right. When the light comes from the left, the LCD image is projected toward the right. When the light comes from the right, the image is projected toward the left.

Rapidly switching the direction of the light source, while switching the LCD content between the left-eye and right-eye images at the same frequency, produces stereo vision, but only if the screen is directly in front of the viewer. Viewed from any other angle, the stereo effect disappears and the image looks flat.

Because the viewing range is so limited, this display technology is referred to as "2.5D".

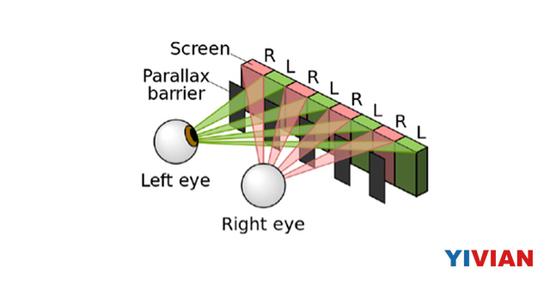

If we add a cover layer with many small openings on top of a 2D screen, we cannot see all the pixels underneath when we look through the openings. Which pixels we see depends on the viewing angle, so the observer's left and right eyes may see different sets of pixels.
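The dependence on viewing angle follows from similar triangles. The sketch below considers a single slit and a flat pixel plane a small gap behind it, and ignores refraction in the glass; the function name `visible_pixel_x` and all dimensions are assumptions chosen for illustration, not values from any real product.

```python
def visible_pixel_x(eye_x_m, eye_dist_m, gap_m, slit_x_m=0.0):
    """Horizontal position on the pixel plane seen by an eye looking
    through one slit of the barrier.

    eye_x_m    : sideways position of the eye
    eye_dist_m : distance from the eye to the barrier
    gap_m      : distance from the barrier to the pixel plane
    slit_x_m   : sideways position of the slit
    """
    if eye_dist_m <= 0 or gap_m < 0:
        raise ValueError("eye_dist_m must be positive and gap_m non-negative")
    # The ray from the eye through the slit keeps its slope behind the barrier.
    return slit_x_m + (slit_x_m - eye_x_m) * gap_m / eye_dist_m


if __name__ == "__main__":
    gap = 0.001     # 1 mm between barrier and pixels (assumed)
    distance = 0.5  # viewer 50 cm from the screen (assumed)
    left = visible_pixel_x(-0.0315, distance, gap)
    right = visible_pixel_x(+0.0315, distance, gap)
    print(f"left eye sees x = {left * 1e6:.1f} micrometers")
    print(f"right eye sees x = {right * 1e6:.1f} micrometers")
```

Through the same slit the two eyes land on different spots of the pixel plane, so placing a left-eye pixel at one spot and a right-eye pixel at the other delivers a separate image to each eye.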

The concept of the "parallax barrier" was devised a century ago, and Sharp first put it to commercial use a decade ago.

In its improved form, the technology now uses a switchable barrier: another active screen layer that can either create the barrier effect or turn transparent, returning the screen to 2D mode at full resolution.

Back in 2011, the HTC EVO 3D and LG Optimus 3D made headlines as the world's first smartphones to support 3D. But they were just another example of 2.5D technology, providing 3D effects only within a very narrow range of viewing angles.

Technically, the parallax barrier can be extended to cover a wider viewing angle. The problem is that the wider the viewing angle, the more light must be blocked, which leads to excessive power consumption, especially on mobile devices.
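A back-of-the-envelope estimate shows why light loss grows. The sketch below rests on an idealized assumption that is not stated in the article: a wider viewing range is obtained by adding views, and with N views each slit exposes only one pixel column out of every N, so roughly 1/N of the backlight gets through. The function name is hypothetical.

```python
def blocked_fraction(num_views):
    """Idealized share of backlight absorbed by a parallax barrier
    that serves num_views distinct views (assumes 1/N transmission)."""
    if num_views < 1:
        raise ValueError("num_views must be at least 1")
    return 1.0 - 1.0 / num_views


if __name__ == "__main__":
    for n in (2, 4, 8, 16):
        print(f"{n:2d} views -> {blocked_fraction(n):.1%} of the light blocked")
```

Under this assumption the backlight would have to be driven N times harder to keep the same brightness, which is the power cost that weighs most heavily on battery-powered devices.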

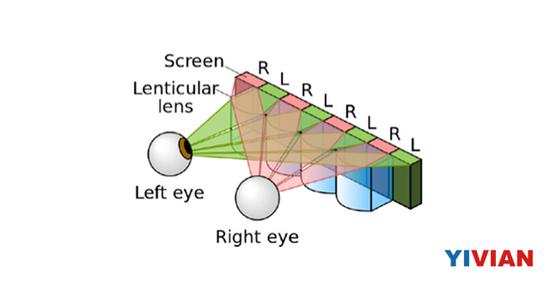

Another approach is to cover a 2D screen with a layer of tiny convex lenses. We all know that a convex lens can focus parallel light from a distant source; children use this principle when they set things alight with a magnifying glass.

These lenses collect light from the screen pixels and turn it into a directed beam. This is called collimation.

The direction of the beam depends on the position of the pixel beneath the lens, so different pixels send their beams in different directions. Lenticular lens technology ultimately achieves the same effect as the parallax barrier (both rely on different pixel groups being seen from different positions in space), except that the lenticular lens does not block any light.
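How pixel position maps to beam direction can be sketched with the thin-lens approximation. The snippet assumes an ideal thin lens with the pixel sitting exactly in its focal plane, which is a simplification of a real lenticular sheet; the function name and the numbers are illustrative only.

```python
import math


def beam_angle_deg(pixel_offset_m, focal_length_m):
    """Direction of the collimated beam produced by a pixel located
    pixel_offset_m from the lens axis, in the focal plane of an ideal
    thin lens. A pixel to the right of the axis sends light to the left."""
    if focal_length_m <= 0:
        raise ValueError("focal length must be positive")
    return math.degrees(math.atan2(-pixel_offset_m, focal_length_m))


if __name__ == "__main__":
    focal_length = 500e-6  # 0.5 mm lens focal length (assumed)
    for offset_um in (-100, -50, 0, 50, 100):
        angle = beam_angle_deg(offset_um * 1e-6, focal_length)
        print(f"pixel at {offset_um:+4d} micrometers -> beam at {angle:+.2f} degrees")
```

Each pixel under a lens therefore addresses its own viewing direction, which is how one lens with several pixels behind it produces several views.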

So why don't we see a lenticular 3D screen on the market?

This is not for lack of trying: Toshiba released a first-generation system in Japan in 2011. However, this kind of screen shows unacceptable visual artifacts when viewed closely, caused mainly by the lenses.

First, a screen pixel usually consists of a small emitting area and a larger, unlit "black matrix." After passing through the lens, the emitter and the black matrix of a single pixel are deflected into different directions in space. This produces very noticeable dark zones in the 3D image. The only remedy is to "defocus" the lens, but that causes crosstalk between views and blurs the image.

Second, it is hard to achieve proper collimation over a wide viewing angle with a single lens. This is why camera lenses and microscopes use compound lenses rather than single lenses. As a result, a lenticular system shows true motion parallax only within a narrow viewing angle (about 20 degrees). Beyond this range the 3D image repeats, so it feels as though the viewing angle is wrong, and the image grows increasingly blurred.

A narrow field of view and poor transitions between views are the biggest drawbacks of lenticular screens. For television systems, current lenticular technology is acceptable if viewers naturally position their heads and do not walk around.

However, in use cases such as mobile phones and cars, the head is bound to move, so lenticular systems struggle to deliver the desired effect.

So how do we design a naked-eye 3D vision system with a wide field of view and smooth transition?

If I know where your eyes are relative to the screen, I can calculate the corresponding viewing angles and steer the images toward your eyes. As long as I can detect the position of your eyes quickly and adjust the images just as quickly, I can make sure you see stereo vision and smooth motion parallax from any viewing angle.
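The calculation described here can be sketched as follows. This is a simplified, horizontal-only illustration: it assumes the tracker reports the head's sideways offset and distance from the screen center, and that the eyes sit 63 mm apart on a line parallel to the screen. The function name and parameters are hypothetical and do not describe any particular tracking system.

```python
import math


def eye_view_angles_deg(head_x_m, head_dist_m, ipd_m=0.063):
    """Viewing angles of the left and right eye, measured from the
    screen normal at the screen center (positive = viewer's right)."""
    if head_dist_m <= 0:
        raise ValueError("head distance must be positive")
    left = math.degrees(math.atan2(head_x_m - ipd_m / 2.0, head_dist_m))
    right = math.degrees(math.atan2(head_x_m + ipd_m / 2.0, head_dist_m))
    return left, right


if __name__ == "__main__":
    # Head centered, then shifted 20 cm to the right, both at 50 cm.
    for head_x in (0.0, 0.2):
        left, right = eye_view_angles_deg(head_x, 0.5)
        print(f"head at {head_x:+.2f} m -> left {left:+.2f}, right {right:+.2f} degrees")
```

A display would re-run a calculation of this kind for every tracked frame and render the two views for exactly those two angles, which is why tracking latency translates directly into visible errors.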

This is how an eye-tracking autostereoscopic screen works.

The advantage of this method is that the screen only ever needs to render two views, so most of the screen's pixel resolution is preserved. In practice, eye tracking can be combined with existing parallax barrier technology, avoiding the optical artifacts of lenticular systems.

But eye tracking is not a panacea. First, it supports only one viewer at a time. It also requires extra cameras on the device and sophisticated software running continuously in the background to predict eye position.

Size and power consumption are not a big issue for TV systems, but they can have a huge impact on mobile devices.

In addition, even the best eye tracking systems suffer delays or errors. Common causes include changes in lighting, eyes hidden by hair or glasses, the camera picking up another pair of eyes, or the viewer's head moving too fast. When the system makes an error, the viewer sees a very uncomfortable visual effect.

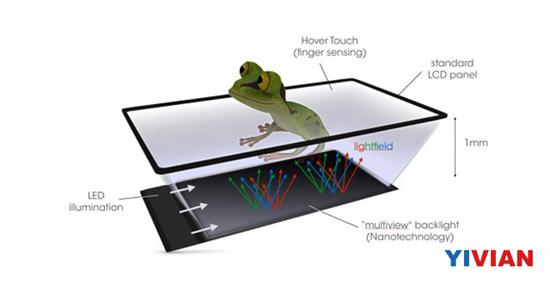

The latest development in naked-eye 3D technology is the diffractive "multi-view" backlit LCD screen. Diffraction is a property of light: when light encounters submicron structures it is deflected, which means we are entering the realm of nanotechnology.

You read that right: nanotechnology.

A normal LCD backlight emits light in random directions, so each LCD pixel radiates into a wide region of space. A diffractive backlight, however, can emit light in well-defined directions (a light field) and can make a given LCD pixel emit in a single direction. In this way, different pixels can send their own signals in different directions.

Like the lenticular lens, the diffractive backlight makes full use of the incident light. Unlike lenticular lenses, however, it can handle both small and large emission angles and fully control the angular spread of each view. With a sound design there are no dark zones between views, and the resulting 3D image can be as sharp as with a parallax barrier.

Another feature of the diffractive approach is that the light-steering structures do not affect light passing straight through the screen.

The backlight therefore stays fully transparent, so a normal backlight can be placed underneath it to return to full-resolution 2D mode. This paves the way for see-through naked-eye 3D displays.

The main problem with the diffractive approach is color consistency. Diffractive structures typically send light of different colors in different directions, and this dispersion has to be compensated at the system level.
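The color dependence follows from the textbook grating equation for light arriving at normal incidence, sin θ = mλ/d, where λ is the wavelength, d the grating pitch and m the diffraction order. The snippet below applies that equation to a simple periodic grating in air; it is not a model of any actual diffractive backlight, and the 1000 nm pitch and the function name are assumptions for illustration.

```python
import math


def diffraction_angle_deg(wavelength_nm, pitch_nm, order=1):
    """Diffraction angle from the grating equation sin(theta) = m * lambda / d
    for normally incident light on a periodic grating in air."""
    s = order * wavelength_nm / pitch_nm
    if abs(s) > 1.0:
        raise ValueError("this order does not propagate for the given pitch")
    return math.degrees(math.asin(s))


if __name__ == "__main__":
    pitch = 1000.0  # grating pitch in nanometers (assumed)
    for name, wavelength in (("blue", 450.0), ("green", 550.0), ("red", 650.0)):
        angle = diffraction_angle_deg(wavelength, pitch)
        print(f"{name:5s} {wavelength:.0f} nm -> {angle:.2f} degrees")
```

With a single pitch, red light is steered to a noticeably larger angle than blue, so a view intended for one direction would split into colored fringes unless the design compensates, for example by treating each color separately.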

But 3D technology will not stop at showing 3D images; it will open up a whole new paradigm of user interaction.

The 3D experience will soon reach consumer-grade quality, and we will no longer have to worry about the experience breaking down.

In virtual reality and augmented reality systems, this means increasing the field of view, supporting visual adjustment, and making the system more responsive to rapid head movements.

In naked-eye 3D displays, this means giving the user enough room to move freely and avoiding visual artifacts such as loss of 3D, dark zones, visual jumps, or lagging motion parallax.

Once the illusion is convincing, we forget the technology behind it and treat the virtual world as the real one, at least until we walk into a real wall. To sustain such an illusion, we have to account for the fact that the real world pushes back physically.

When we turn electronic data into light signals that we can perceive in the real world, we also need to send data about the body's real reactions back to the digital world so the two can interact.

In virtual reality and augmented reality headsets, this can be done with sensors and cameras that the user wears or that are placed in the surrounding environment. We can foresee smart wearables fitted with sensors in the future, but they may be cumbersome.

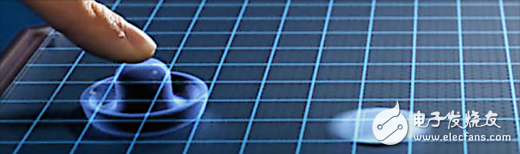

On a 3D screen, this task can be handled by the screen itself. In fact, the Hover Touch technology developed by Synaptics can already sense fingers without contact. Soon we will only need to move our fingers in midair to interact with a holographic image.

Once the digital world understands how the user reacts, the two can merge more naturally. In other words, the digital world can open a door before we hit the wall.

But wouldn't it be even better if you could feel the wall in the virtual world? How do we bring touch into that world?

This question belongs to the field of haptic feedback. If you have ever put your phone in vibration mode, you have experienced this kind of feedback.

For example, gloves and other garments fitted with vibration units, or weak electrical signals that stimulate the skin, can be tuned so that different parts of your body feel touch that matches what you see.

Of course, not everyone is comfortable wearing clothes covered in wires or feeling an electric current.

For screen-based devices, ultrasonic haptics lets you feel touch in the air in front of the screen without any smart clothing. The technology emits ultrasonic waves from around the screen, and their intensity can be adjusted according to the user's finger movements.

Believe it or not, these focused sound waves are strong enough for your skin to feel. Companies like Ultrahaptics are already preparing to bring this technology to market.

While today's virtual reality and augmented reality headsets are becoming more common, they still have many limitations in mobility and social use, which makes a fully interactive 3D experience hard to achieve. A 3D screen with midair finger-touch technology will overcome this obstacle and let us interact with the digital world more directly in the future.

In a recent blog post, I referred to this platform as Holographic Reality and described how holographic reality displays can be applied in every aspect of our daily lives. All the windows, tables, walls and doors of the future will have holographic reality. The communication devices we use in offices, homes, cars and even public places will carry holographic reality components. We will be able to access the virtual world anytime, anywhere, without wearing a headset or cables.

In the past five years, 3D technology, display technology and headsets have developed enormously.

With technology developing this fast, it is not hard to imagine that within the next five years we will communicate, learn, work, shop and play in a world of panoramic reality, realized by an advanced 3D ecosystem of products such as headsets and 3D screens.
