Deep learning is probably the hottest topic in this decade and even in the coming decades. Although deep learning is well known, it does not only include mathematics, modeling, learning, and optimization. Algorithms must run on optimized hardware because it can take up to several weeks to learn thousands of data. Therefore, deep learning networks require faster, more efficient hardware.

As we all know, not all processes can run efficiently on the CPU. Game and video processing require specialized hardware, the graphics processing unit (GPU), and signal processing requires other independent architectures such as digital signal processors (DSPs). People have been designing dedicated hardware for learning. For example, in March 2016, the AlphaGo computer with Li Shishi used a distributed computing module consisting of 1920 CPUs and 280 GPUs. With NVIDIA releasing a new generation of Pascal GPUs, people have begun to pay equal attention to deep learning software and hardware. Next, let's focus on the hardware architecture of deep learning.

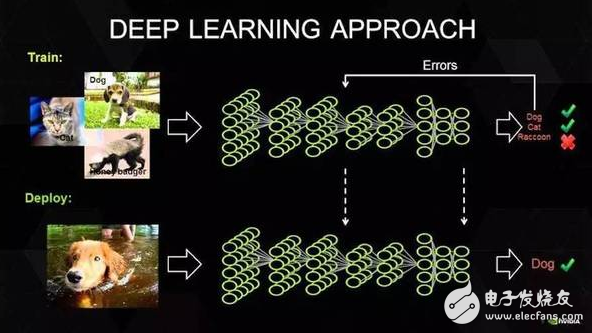

Requirements for deep learning hardware platformsTo understand what hardware we need, we must understand how deep learning works. First on the surface, we have a huge data set and we have chosen a deep learning model. Each model has some internal parameters that need to be adjusted to learn the data. And this kind of parameter adjustment can actually be attributed to the optimization problem. When adjusting these parameters, it is equivalent to optimizing the specific constraints.

Photo: Nvidia

Baidu's Silicon Valley Artificial Intelligence Lab (SVAIL) has proposed the DeepBench benchmark for deep learning hardware, which focuses on the hardware performance of basic computing rather than the performance of the learning model. This approach aims to find bottlenecks that make calculations slow or inefficient. Therefore, the focus is on designing an architecture that performs best for the basic operations of deep neural network training. So what are the basic operations? The current deep learning algorithms mainly include Convolutional Neural Network (CNN) and Recurrent Neural Network (RNN). Based on these algorithms, DeepBench proposes the following four basic operations:

Matrix Multiplication (Matrix MulTIplicaTIon) - Almost all deep learning models include this operation, which is computationally intensive.

ConvoluTIon - This is another commonly used operation that takes up most of the floating-point operations per second (floating point/second) in the model.

Recurrent Layers - The feedback layer in the model, and is basically a combination of the first two operations.

All Reduce - This is a sequence of operations that pass or parse the learned parameters before optimization. This is especially effective when performing synchronous optimizations on deep learning networks that are distributed across hardware, such as the AlphaGo example.

In addition, deep learning hardware accelerators require data level and process parallelism, multi-threading and high memory bandwidth. In addition, due to the long training time of the data, the hardware architecture must have low power consumption. Therefore, Performance per Watt is one of the evaluation criteria for hardware architecture.

Current trends and future trends

NVIDIA's GPUs have been at the forefront of the deep learning hardware market. Photo: Nvidia

NVIDIA dominates the current deep learning market with its large-scale parallel GPU and dedicated GPU programming framework, CUDA. But more and more companies have developed accelerated hardware for deep learning, such as Google's TPU/Tensor Processing Unit, Intel's Xeon Phi Knight's Landing, and Qualcomm's neural network processor (NNU/ Neural Network Processor). Companies like Teradeep are now using FPGAs (field programmable gate arrays) because they are 10 times more energy efficient than GPUs. FPGAs are more flexible, scalable, and have higher performance-to-power ratios. But programming for FPGAs requires specific hardware knowledge, so there has recently been a development of FPGA-level programming models for software.

In addition, the widely accepted philosophy has been that a unified architecture for all models does not exist because different models require different hardware processing architectures. Researchers are working hard and hope that the widespread use of FPGAs can overturn this claim.

Most deep learning software frameworks (such as TensorFlow, Torch, Theano, CNTK) are open source, and Facebook has recently opened its Big Sur deep learning hardware platform, so in the near future, we should see more open source learning. Hardware architecture.

Piezoelectric Ceramic Actuator Plate,Piezoelectric Bending Ceramic,Piezo Ceramic Diaphragm

NINGBO SANCO ELECTRONICS CO., LTD. , https://www.sancobuzzer.com